LINEAR STOCHASTIC PDEs

Description and why it is important

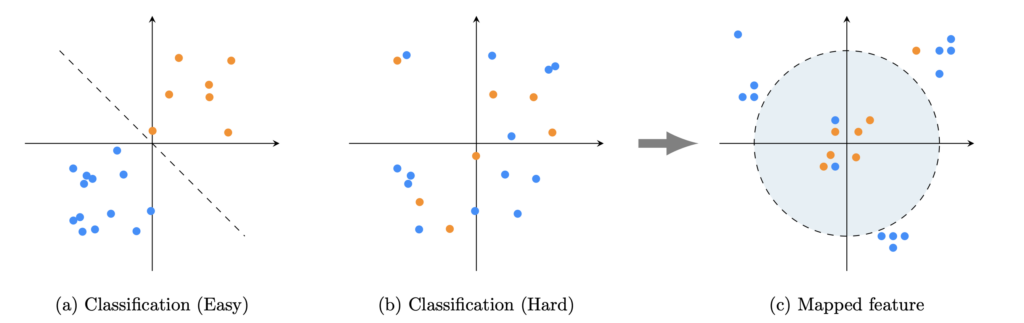

Hybrid collocation-perturbation

Consider a linear elliptic PDE defined over a stochastic geometry that is a function of N random variables. In many applications, quantifying the uncertainty propagated to a quantity of interest (QoI) is an important problem. The random domain is split into large-variation and small-variation contributions. The large variations are approximated by applying a sparse grid stochastic collocation method. The small variations are approximated with a stochastic collocation-perturbation method and added as a correction term to the large-variation sparse grid component. Convergence rates for the variance of the QoI are derived and compared to those obtained in numerical experiments. Our approach significantly reduces the dimensionality of the stochastic problem, making it suitable for high-dimensional problems. The computational cost of the correction term increases at most quadratically with respect to the number of dimensions of the small variations. Moreover, when the small and large variations are independent, the cost increases linearly.
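The split into a collocation part and a perturbation correction can be illustrated with a minimal sketch. It is not the method's actual implementation: the toy `qoi` function stands in for a PDE solve, the names `A`, `C`, `DELTA`, and `hybrid_moments` are hypothetical, a one-dimensional Gauss-Legendre rule replaces the sparse grid, and the small variables are assumed zero-mean, uniform, mutually independent, and independent of the large variable. Under those assumptions the first-order correction to the variance needs one derivative per small dimension at each collocation knot, so its cost grows linearly with the number of small dimensions.

```python
import numpy as np

A = 0.5                          # hypothetical sensitivity to the large variable
C = np.array([0.8, -0.5, 0.3])   # hypothetical sensitivities to the small variables
DELTA = 0.05                     # small variables assumed uniform on [-DELTA, DELTA]


def qoi(y_large, y_small):
    """Toy stand-in for a PDE solve; linear in y_small by construction."""
    return np.exp(A * y_large) * (1.0 + C @ y_small)


def hybrid_moments(qoi_fn, n_small, delta, n_knots=8, h=1e-4):
    """Mean and variance from collocation in y_large plus a first-order
    perturbation correction in y_small (independent, zero-mean case)."""
    # Collocation knots for one large variable, uniform on [-1, 1].
    knots, weights = np.polynomial.legendre.leggauss(n_knots)
    weights = weights / 2.0      # normalise to the uniform density 1/2
    var_small = delta ** 2 / 3.0  # variance of U(-delta, delta)
    zero = np.zeros(n_small)

    mean_q0 = 0.0
    mean_q0_sq = 0.0
    correction = 0.0
    for y, w in zip(knots, weights):
        q0 = qoi_fn(y, zero)
        mean_q0 += w * q0
        mean_q0_sq += w * q0 ** 2
        # One central difference per small dimension at each knot.
        for i in range(n_small):
            e = np.zeros(n_small)
            e[i] = h
            grad_i = (qoi_fn(y, e) - qoi_fn(y, -e)) / (2.0 * h)
            correction += w * grad_i ** 2 * var_small

    variance = mean_q0_sq - mean_q0 ** 2 + correction
    return mean_q0, variance


mean, variance = hybrid_moments(qoi, n_small=len(C), delta=DELTA)
```

The toy QoI is linear in the small variables, so the first-order correction is exact here up to quadrature and rounding error. For a general QoI the truncation introduces an error that this sketch does not estimate.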

Stochastic collocation approach

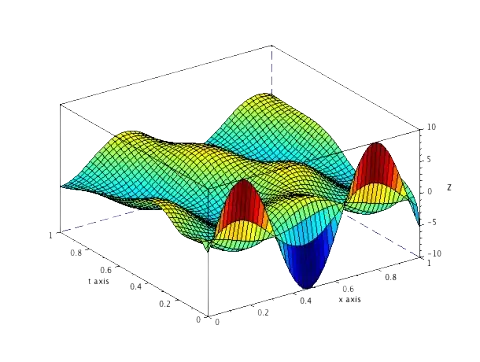

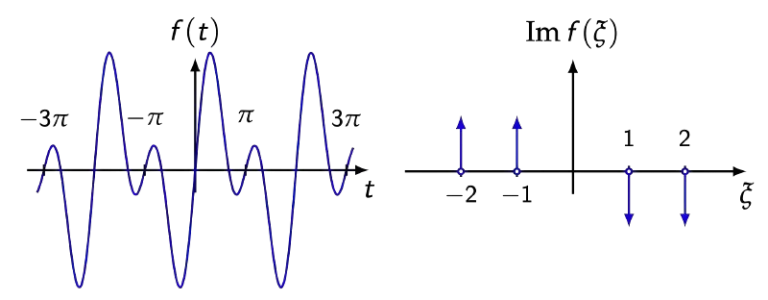

In this article we analyze a linear parabolic partial differential equation with a stochastic domain deformation. In particular, we concentrate on the problem of numerically approximating the statistical moments of a given Quantity of Interest (QoI). The geometry is assumed to be random. The parabolic problem is remapped to a fixed deterministic domain with random coefficients and shown to admit an extension on a well-defined region embedded in the complex hyperplane. The stochastic moments of the QoI are computed by employing a collocation method in conjunction with an isotropic Smolyak sparse grid. Theoretical sub-exponential convergence rates as a function of the number of collocation interpolation knots are derived. Numerical experiments confirm the theoretical error estimates.
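To make the moment computation concrete, the following is a minimal sketch of an isotropic Smolyak quadrature written with the combination technique. Several choices are assumptions made for illustration and are not taken from the article: the random variables are independent and uniform on [-1, 1], the one-dimensional rules are Gauss-Legendre (not nested) with 1 point at level 1 and 2^(level-1) + 1 points afterwards, and the names `rule_1d`, `smolyak_mean`, and `toy_qoi` are hypothetical. In practice each evaluation of the QoI would be a deterministic PDE solve at one collocation knot.

```python
import itertools
import math

import numpy as np


def rule_1d(level):
    """Gauss-Legendre rule for a U(-1, 1) variable (assumed growth rule)."""
    m = 1 if level == 1 else 2 ** (level - 1) + 1
    x, w = np.polynomial.legendre.leggauss(m)
    return x, w / 2.0  # weights sum to 1 under the uniform density


def smolyak_mean(f, dim, w):
    """Approximate E[f(Y)] with an isotropic Smolyak rule of level w >= 0."""
    q = w + dim
    total = 0.0
    # Each 1D level lies in 1..w+1; keep multi-indices with q-dim+1 <= |i| <= q.
    for idx in itertools.product(range(1, w + 2), repeat=dim):
        s = sum(idx)
        if s < q - dim + 1 or s > q:
            continue
        coeff = (-1) ** (q - s) * math.comb(dim - 1, q - s)
        rules = [rule_1d(level) for level in idx]
        # Full tensor product of the selected one-dimensional rules.
        for combo in itertools.product(*[range(len(r[0])) for r in rules]):
            y = np.array([rules[k][0][j] for k, j in enumerate(combo)])
            weight = math.prod(rules[k][1][j] for k, j in enumerate(combo))
            total += coeff * weight * f(y)
    return total


def toy_qoi(y):
    """Hypothetical polynomial QoI used only to exercise the rule."""
    return y[0] ** 2 + y[0] * y[1] + (y[1] * y[2]) ** 2


mean = smolyak_mean(toy_qoi, dim=3, w=2)
second = smolyak_mean(lambda y: toy_qoi(y) ** 2, dim=3, w=4)
variance = second - mean ** 2
```

Higher moments follow the same pattern: apply `smolyak_mean` to a power of the QoI, as done for the second moment above. A nested rule such as Clenshaw-Curtis would reuse knots across levels and reduce the number of solves, at the price of slightly more bookkeeping.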

Parabolic PDEs with Random Geometry

CONTACT

Stochastic Machine Learning Group

- Department of Mathematics and Statistics, Boston University, 665 Commonwealth Ave., Boston, MA 02215

- + (617) 353-9549

- jcandas@bu.edu

- mkon@math.bu.edu


© 2024 – 2025, Stochastic Machine Learning Group